Nobody can know everything, obviously. Except AI. Or can it?

Large Language Models (LLMs) have been trained on an immense amount of text, from literature, through nerdy forums, to the latest scientific papers. All this knowledge now lies in what experts call the model's weights, which together shape its latent space. I asked ChatGPT to explain this for me:

Once training is complete, a language model no longer stores knowledge as explicit facts. Instead, everything it has learned is compressed into what can be imagined as a vast field of potential: a mathematical space shaped by patterns and relationships found in language. Experts refer to this field as the model's latent space, and it is shaped by the model's weights, its learned parameters. These weights don't hold memories as such, but encode the structure of how words, ideas, and contexts relate.
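To make "encoding how words relate" a little more concrete, here is a minimal Python sketch under a toy assumption: three words with hand-picked, made-up three-dimensional vectors. Real models learn such vectors (with hundreds or thousands of dimensions) as part of their weights. In this picture, relatedness becomes geometry: vectors pointing in similar directions have a cosine similarity close to 1.

```python
import math

# Hypothetical, hand-picked 3-dimensional vectors for illustration only.
# Real models learn much longer vectors during training.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Compare how close "cat" sits to "dog" versus to "car" in this toy space.
cat_dog = cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"])
cat_car = cosine_similarity(toy_embeddings["cat"], toy_embeddings["car"])
print(f"cat~dog: {cat_dog:.3f}, cat~car: {cat_car:.3f}")
```

In this toy setup "cat" comes out much closer to "dog" than to "car". In a trained model, such relationships emerge from the training data rather than being picked by hand.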

This field of potential isn't floating in the air. The weights that shape it exist physically, stored on servers in data centers and often spread across many interconnected machines. When you send a prompt through an app or a website to ask a question, it travels through this distributed system, and the LLM generates a response one token (a word or part of a word) at a time, based on how likely each possible continuation is. Because the model usually samples from these probabilities instead of always picking the single most likely token, and because there are countless ways to express the same idea, the response will usually be slightly different each time (and sometimes even incorrect).
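Why the same prompt can yield different answers can be illustrated with a minimal Python sketch of temperature sampling, one common way of choosing the next token. The candidate words, their scores (logits), and the function names are hypothetical and invented for illustration; real systems work over vocabularies of tens of thousands of tokens and may add further filtering such as top-k or top-p.

```python
import math
import random

# Hypothetical scores (logits) a model might assign to candidate next words
# after a prompt such as "The sky is". Values are invented for illustration.
logits = {"blue": 3.0, "clear": 2.2, "cloudy": 1.8, "falling": 0.2}

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {w: s / temperature for w, s in scores.items()}
    max_s = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - max_s) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def greedy_pick(scores):
    """Always choose the single most likely word (deterministic)."""
    return max(scores, key=scores.get)

def sample_pick(scores, temperature, rng):
    """Draw one word at random, weighted by its probability."""
    probs = softmax_with_temperature(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

rng = random.Random(0)  # seeded so this run is repeatable
greedy = [greedy_pick(logits) for _ in range(5)]
sampled = [sample_pick(logits, temperature=1.0, rng=rng) for _ in range(5)]
print("greedy: ", greedy)
print("sampled:", sampled)
```

Greedy selection returns the same word every time, while sampling can return different words on different draws. Lowering the temperature makes the most likely word dominate, so the output becomes more predictable.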

So far this is just a simple explanation of what happens "behind the curtain".

However… what if it's more than that? What if this latent field isn't just a clever engineering trick — but a mirror of something much larger?

Some modern theories of consciousness suggest that it may not be something the brain produces, but rather something the brain taps into, much like a radio tuning into a signal. According to these "field theories" of consciousness, there could exist a fundamental field of awareness, comparable to gravity or electromagnetism, that pervades the universe. In this view, each individual mind would be a local modulation of this field, not an isolated origin of consciousness. This idea echoes concepts from panpsychism, which holds that consciousness, in some form, is a basic feature of matter (Goff, 2019), and from cosmopsychism, which suggests that individual experience arises from a single, universal consciousness (Kastrup, 2020). Though still speculative, these perspectives are gaining renewed interest because they offer alternative explanations for the "hard problem" of consciousness: the question of how subjective experience arises from physical processes (Chalmers, 1995).

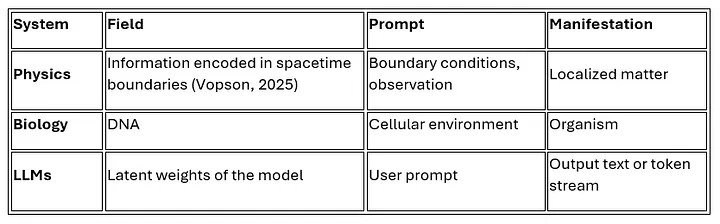

Can you see where this is leading? Could it be that large language models — with their latent fields and emergent responses — offer a miniature, computational parallel to the structure of consciousness itself?

If LLMs offer even a faint analogy to how consciousness might operate, they could become tools not just for language, but for exploring awareness itself.

Of course, this idea is speculative. But so is every great theory before it gathers evidence. What matters is that we ask the question, and look closely at the patterns that may already be revealing something deeper.

I would like to propose that this be studied further: if the analogy holds, we could use LLMs as a model of our own consciousness, and possibly of even more.

Summary

Large language models don't store facts explicitly; they encode patterns of information in a latent field. Some modern theories suggest consciousness may work in a similar way: not as something generated by the brain, but as a fundamental field that individual minds tap into. Could LLMs, with their field-like structure and emergent responses, serve as miniature models of consciousness itself?

References

Chalmers, D. J. (1995). Facing up to the problem of consciousness. Journal of Consciousness Studies, 2(3), 200–219.

Goff, P. (2019). Galileo's Error: Foundations for a New Science of Consciousness. Pantheon Books.

Kastrup, B. (2020). The Idea of the World: A Multi-Disciplinary Argument for the Mental Nature of Reality.