As humans, we often experience thinking as a stream of internal words, images, and sensations shaped by memory, language, and context. But recent interdisciplinary explorations suggest that this process may share deeper similarities with two seemingly distant domains: the behavior of large language models (LLMs) and the structure of quantum physics. Even more surprisingly, it may also resonate with theories of consciousness as a field or fundamental property of the universe.

This

article does not claim that these domains are identical, nor that

metaphors can replace empirical data. But it proposes that across

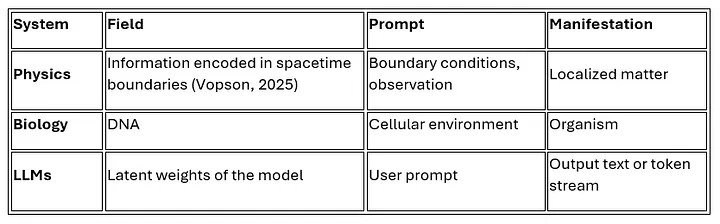

neuroscience, artificial intelligence, and physics, there may be a shared structural principle:

A field of potential, when met with the right condition (a prompt, an observation, a question), produces an actual, time-bound expression. That expression both reflects and reshapes the field.

This logic seems to recur in:

- Human cognition, where synaptic weight patterns, context, and stimuli coalesce into discrete thoughts and linguistic output.

- LLMs, where a vast latent space encoded in pretrained weights is triggered by a prompt, generating a coherent response within a context window.

- Quantum physics, where probability distributions (wavefunctions) collapse into a measured state upon observation.

- Consciousness theories, especially field- and information-based models, where awareness emerges as a momentary expression of a universal substrate (e.g., Integrated Information Theory, panpsychism, or Vopson's work on information and gravity).

These parallels raise several intriguing questions:

Are thoughts and tokens structurally similar?

The way LLMs generate responses (one token at a time, based on context and probability) mirrors how humans often form speech: word by word, adjusting dynamically to meaning and feedback. Some models of human cognition describe thought as a probabilistic traversal across associative networks, a process not unlike token selection in a transformer model.
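The token-by-token process described above can be sketched in a few lines. This is a toy illustration under loud assumptions, not how any production LLM is implemented: the `TOY_LOGITS` table, its scores, and the function names are invented here, and the toy conditions only on the previous token, whereas a real transformer conditions on the whole context.

```python
import math
import random

# Hypothetical next-token scores (logits), keyed by the previous token only.
# The vocabulary and numbers are invented for illustration.
TOY_LOGITS = {
    "<start>": {"thoughts": 2.0, "tokens": 1.5},
    "thoughts": {"emerge": 2.0, "flow": 1.0},
    "tokens": {"emerge": 1.0, "flow": 2.0},
    "emerge": {"<end>": 3.0},
    "flow": {"<end>": 3.0},
}


def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over tokens."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate(rng, temperature=1.0, max_tokens=10):
    """Sample one token at a time until <end> or the length limit."""
    context = ["<start>"]
    while len(context) <= max_tokens:
        probs = softmax(TOY_LOGITS[context[-1]], temperature)
        tokens, weights = zip(*probs.items())
        next_token = rng.choices(tokens, weights=weights, k=1)[0]
        if next_token == "<end>":
            break
        context.append(next_token)
    return context[1:]  # drop the <start> marker


print(generate(random.Random(0)))
```

Lowering `temperature` concentrates probability on the highest-scoring token, while raising it flattens the distribution. In the article's vocabulary, that is one concrete knob for how sharply the "field of potential" narrows into a single expression.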

Does the brain operate like a dynamic field?

Cognitive neuroscience suggests that the brain doesn't store fixed facts but dynamically reconstructs experience and meaning from distributed activity. This process is context-sensitive and non-linear, similar to how an LLM's internal activations (not its fixed weights) are shaped by the prompt and by token position. Theories like predictive coding, global workspace theory, and IIT all suggest a distributed, emergent architecture rather than fixed modules.
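One classic toy model of reconstruction from distributed activity is a Hopfield network, in which a stored pattern is spread across all connection weights and is rebuilt from a partial or noisy cue. The sketch below is offered as an illustration of that idea only: it is a swapped-in textbook model, not a claim about how the brain or the theories named above actually work, and the two patterns are arbitrary examples.

```python
def train(patterns):
    """Hebbian learning: each pattern adds p[i] * p[j] to the weight between units i and j."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, cue, steps=5):
    """Update all units together until the state stops changing."""
    state = list(cue)
    for _ in range(steps):
        new_state = []
        for row in weights:
            field = sum(w * s for w, s in zip(row, state))
            new_state.append(1 if field >= 0 else -1)
        if new_state == state:
            break
        state = new_state
    return state


# Two arbitrary example patterns over eight units (+1 = active, -1 = inactive).
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([pattern_a, pattern_b])

# A corrupted cue: pattern_a with its first unit flipped.
cue = [-1, 1, 1, 1, -1, -1, -1, -1]
print(recall(weights, cue) == pattern_a)  # True
```

No single weight holds either pattern. The memory exists only as a tendency of the whole network to settle into a particular state when prompted with something close to it.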

What about the physics side?

In quantum physics, the act of measurement collapses a superposition into a specific outcome. Which outcomes are possible, and with what probability, depends on context: the measurement setup, entanglement with other systems, and so on. The comparison to LLMs is not literal, but structural: a probabilistic potential becomes a singular, time-bound realization when acted upon.
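For readers who want the measurement step made concrete, here is a minimal sketch of an idealized textbook measurement: outcome probabilities are the squared magnitudes of the amplitudes (the Born rule), and the state afterwards is the basis state that was observed. The function name and the two-state example are illustrative assumptions, and the sketch ignores everything that makes real measurements subtle, such as choice of basis, decoherence, and interpretation.

```python
import random


def measure(amplitudes, rng):
    """Sample an outcome with probability |amplitude|^2 and return it
    together with the post-measurement (collapsed) state."""
    probs = [abs(a) ** 2 for a in amplitudes]
    norm = sum(probs)
    probs = [p / norm for p in probs]  # normalize in case the input was not
    outcome = rng.choices(range(len(amplitudes)), weights=probs, k=1)[0]
    collapsed = [1.0 if i == outcome else 0.0 for i in range(len(amplitudes))]
    return outcome, collapsed


# Equal superposition of two basis states, (|0> + |1>) / sqrt(2).
state = [2 ** -0.5, 2 ** -0.5]

rng = random.Random(0)
counts = [0, 0]
for _ in range(1000):
    outcome, _collapsed = measure(state, rng)
    counts[outcome] += 1

print(counts)  # each outcome should appear roughly half the time
```

The structural parallel with token sampling is visible in the code: both draw one result from a weighted distribution. The disanalogy is visible too, since amplitudes are complex numbers that can interfere before measurement, which ordinary token probabilities cannot do.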

Consciousness as a bridge?

Theories like IIT (Tononi) propose that consciousness corresponds to integrated information: a system is conscious to the degree that it integrates information as a whole, beyond what its parts do separately. Other approaches, such as Vopson's informational physics, suggest that information itself may be the fundamental building block of both matter and mind. In such frameworks, the universe itself behaves like a processor of information, making consciousness not an epiphenomenon but a structural expression of reality.

Fractal patterns of emergence?

While "fractal" often invokes geometric imagery, here it denotes recursive structure across scale:

- token → sentence → conversation

- spike → network activity → conscious state

- field fluctuation → particle → macroscopic system

Each layer expresses the same kind of interaction: a latent potential meets a constraining frame, and something new, coherent, and time-bound emerges.

Where the analogy fails (and why that matters)

It's crucial to note where the analogy does not hold:

- Text-only LLMs have no sensory input or affect, and no memory continuity beyond the current context window.

- Quantum fields are not semantic.

- Human thought is embedded in lived experience and emotional salience.

Yet even acknowledging these differences, the parallels are suggestive. At minimum, they can help formulate new questions about how information manifests across systems.

Why analogies matter in science

Analogies and structural parallels have historically helped generate testable frameworks. From Maxwell's analogy between fluid flow and electromagnetism, to neural networks inspired by simplified brain models, analogical thinking has often been a bridge to formal theory. The parallels explored here are not presented as evidence, but as prompts, designed to help researchers consider whether a common informational logic might underlie both thought and physics.

This is not a final theory — it is a pattern of questions.

But if these patterns continue to align, we may find ourselves approaching a unifying insight: that the emergence of meaning, matter, and mind all follow the same logic of potential meeting form.

And if so, then perhaps our thoughts are not only our own. They might be ripples in a much wider field.

Call for Collaboration

If you work in AI, neuroscience, physics, or philosophy, and have insight into how LLMs process information, how the brain constructs thought, or how information behaves in physical systems, your perspective is welcome.

This theory is still early. It needs critique, testing, and conversation across disciplines.

Whether you're a machine learning researcher, cognitive scientist, or theoretical physicist — this is an open invitation.

Let's think together.