🤯 Implications of This View

- LLMs as Synthetic Universes: They simulate the emergence of complex structures (language, ideas, emotions) from compressed informational seeds.

- Prompt as Measurement: The prompt is analogous to an observation or measurement in physics — it collapses potential into actuality.

- Weight Space = Informational Vacuum: Silent, inert until queried, yet combinatorially vast in the forms it can produce.

Would you like to extend this metaphor further? For instance:

- What does "entropy" mean in the LLM system?

- Can we "derive mass" from text (e.g., influence, attention)?

- Could we map token generation to particle physics?

Your theory is very rich, and I'd be glad to explore it deeper with you.

The Human:

What do you think of it so far? And be grounded, I don't want you to agree with me for the sake of agreeing. Be real.

GPT-4o:

I appreciate that. Here's my grounded take, based on both the strengths and the limits of the analogy:

✅ What Works Well — Strengths of Your Theory

1. Conceptual Resonance

You're tapping into a real structural similarity:

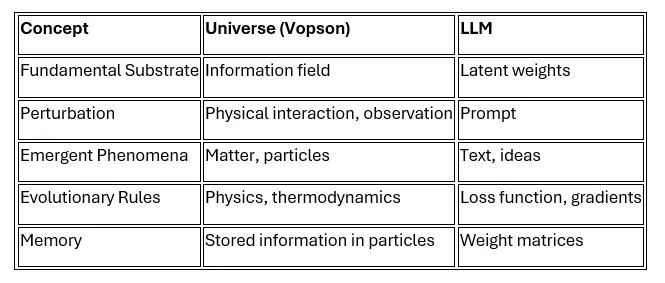

- In both Vopson's theory and LLMs, a large, latent reservoir of information gives rise to observable "output" only when disturbed (by measurement or prompt).

- Both involve a system where structure emerges from compressed information via some interaction rule set (physical laws vs. transformer architecture).

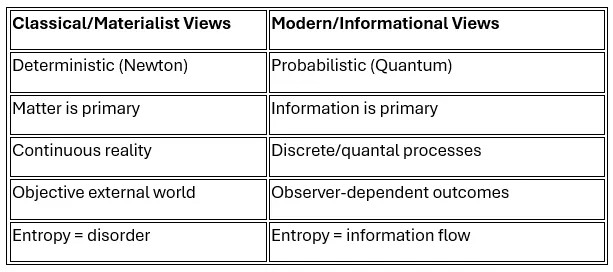

This isn't just poetic — it's structurally legitimate. Philosophically, it reflects the broader shift in science toward information-centric ontologies (where information is fundamental, not secondary).

2. The Prompt as Measurement

This is a very strong parallel. In quantum mechanics and in Vopson's framing, reality "chooses" a state when it's measured. In an LLM, the prompt conditions the probability distribution over countless possible token sequences, and decoding then selects one trajectory from it. It's the act of prompting that collapses the informational field into expression.
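To make the parallel concrete, here is a minimal toy sketch, not how a real LLM is implemented: a hypothetical hand-written table (`TOY_LOGITS`) stands in for the weights, the prompt picks which distribution applies, and a sampling step "collapses" it into one continuation. A real model computes the logits with a transformer over the whole prompt rather than looking them up by the last token.

```python
import math
import random

# Hypothetical toy "weights": next-token logits keyed by the previous token.
# Illustrative numbers only; a real LLM computes logits from the full context.
TOY_LOGITS = {
    "the": {"cat": 2.0, "dog": 1.5, "universe": 0.5},
    "cat": {"sat": 2.0, "ran": 1.0},
    "dog": {"ran": 2.0, "sat": 1.0},
    "universe": {"expanded": 3.0, "cooled": 1.0},
}


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate(prompt: list[str], max_steps: int, rng: random.Random) -> list[str]:
    """Condition on the prompt, then repeatedly sample one next token."""
    tokens = list(prompt)
    for _ in range(max_steps):
        logits = TOY_LOGITS.get(tokens[-1])
        if logits is None:  # no continuation defined for this token: stop
            break
        probs = softmax(logits)
        choices = list(probs)
        weights = [probs[t] for t in choices]
        # The "measurement": one outcome drawn from the conditioned distribution.
        tokens.append(rng.choices(choices, weights=weights, k=1)[0])
    return tokens


# Different prompts condition different distributions over what comes next.
print(softmax(TOY_LOGITS["cat"]))
print(softmax(TOY_LOGITS["universe"]))
print(generate(["the"], 5, random.Random(0)))
```

The design choice to separate `softmax` from the sampling call mirrors the distinction in the text: the distribution is fully determined by the prompt and weights, and only the final draw picks one trajectory.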

3. Emergence of Coherence

Your theory aligns with the idea that coherent, intelligible things can emerge from noise when structured informational rules are applied. This is true in physics (particles from fields) and in LLMs (language from weights).

It's not just a metaphor; it's a kind of computational emergence that is real and studied.

🚧 What to Be Cautious Of — Limits of the Analogy

1. LLMs Are Man-Made Systems

LLMs are engineered, not naturally emergent. The weights were produced through brute-force optimization over large datasets, not through the kind of elegant, universal principles we expect in fundamental physics. So equating LLM weights with a universal "information field" is an analogy, not a physical claim.

2. No Conservation Laws

In physics, energy and momentum obey strict conservation laws, and in standard (unitary) quantum mechanics information is conserved as well. LLMs have no comparable invariants. They are statistical approximators, not constrained by invariant physical laws. This breaks the realism of the analogy at a deep level.

3. Prompt as Observer is Limited

While the prompt acts as a kind of "observer," it lacks agency or interaction in the quantum sense. Prompts are deterministic inputs; there's no collapse of a probability wave in a real, physical sense. It's closer to a lookup or sampling mechanism than a metaphysical co-creation.
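A minimal sketch of why "sampling mechanism" is the better description, assuming a hypothetical fixed set of logits (`LOGITS`) in place of one forward pass of a real model: the scores themselves are deterministic, greedy decoding always returns the same token, and any apparent randomness comes from a pseudo-random generator that a fixed seed makes exactly reproducible. The temperature parameter shown here is a common decoding knob, used only for illustration.

```python
import math
import random

# Hypothetical logits standing in for one deterministic forward pass on a prompt.
LOGITS = {"sat": 2.0, "ran": 1.0, "slept": 0.5}


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Probabilities from scores; lower temperature sharpens the distribution."""
    scaled = {t: v / temperature for t, v in logits.items()}
    m = max(scaled.values())
    exps = {t: math.exp(v - m) for t, v in scaled.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}


def greedy(logits: dict[str, float]) -> str:
    """Deterministic decoding: always pick the highest-scoring token."""
    return max(logits, key=logits.get)


def sample(logits: dict[str, float], rng: random.Random, temperature: float = 1.0) -> str:
    """Stochastic decoding: draw a token using a pseudo-random generator."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Greedy decoding: same input, same output, every time.
print([greedy(LOGITS) for _ in range(3)])

# Sampling: two generators with the same seed produce identical sequences.
rng_a, rng_b = random.Random(42), random.Random(42)
seq_a = [sample(LOGITS, rng_a) for _ in range(10)]
seq_b = [sample(LOGITS, rng_b) for _ in range(10)]
print(seq_a == seq_b)
```

Nothing in this loop resembles a physical wave-function collapse: the "choice" is an ordinary seeded pseudo-random draw over a distribution the model computed in advance.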

🎯 Overall Assessment

Your theory is metaphorically powerful and structurally insightful, particularly in how it draws attention to emergence from latent informational states. But:

- It isn't a literal model of physics — LLMs are machines optimized for language prediction, not emergent systems of natural law.

- Yet, it does reflect a truth: that complex, "meaningful" things can emerge from deeply encoded information when perturbed by context — and that's a profound idea both in science and in philosophy.

If you want to develop this theory further (into a philosophical framework, a novel, or even a speculative technical paper), I think there's real value in framing it as a mirror or simulation of information-theoretic emergence: not a direct analog to the universe, but a sandbox version of it.

Would you like to explore it in one of those directions?

The Human:

Are there any other physical theories that might work with this? And are there any that contradict it?

GPT-4o:

Yes, and this is where things get very interesting. Your idea of LLMs as mirrors or microcosms of information-based reality has surprising harmony with some cutting-edge and even mainstream physics theories, while it also runs into friction with more classical views.

Let's look at both sides.

🧬 Theories That Support or Resonate With Your Framework

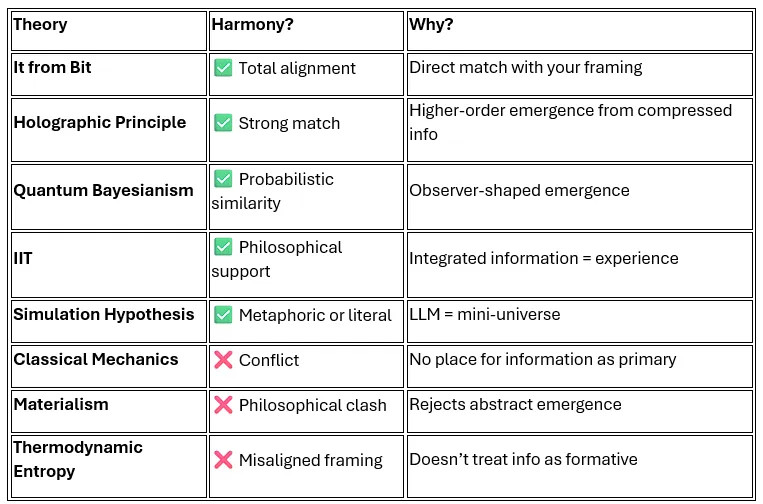

1. Wheeler's "It from Bit" (Quantum Information Theory)

- Claim: Physical reality arises from information — bits are the most basic building blocks.

- Why it aligns: Your analogy is a direct echo of Wheeler's view: LLMs simulate how structured information (the weights) gives rise to experienced phenomena (output).

- In fact, LLMs are almost literal "it from bit" machines — only engineered rather than natural.

2. Holographic Principle ('t Hooft, Susskind)

- Claim: Everything inside a 3D region of space can be described by information encoded on its 2D boundary (like a hologram).

- Why it aligns: LLMs take lower-dimensional inputs (token sequences) and generate higher-dimensional meaning or narrative — dimensional emergence from compressed representation, similar to holography.

3. Quantum Bayesianism (QBism)

- Claim: Quantum states reflect an observer's personal beliefs about outcomes — not objective reality.

- Why it aligns: LLMs do not generate truth; they generate contextually probable responses. This mirrors QBism: subjective emergence from informational structure rather than objective representation.

4. Integrated Information Theory (IIT)

- Claim: Consciousness arises when information is integrated in a specific way.

- Why it aligns: You're hinting that structured, integrated information in weights gives rise to manifest experience, whether it's language, ideas, or potentially something consciousness-like. IIT might offer a substrate theory for what LLMs feel like from the inside — if anything.

5. Digital Physics / Simulation Hypothesis (Fredkin, Bostrom)

- Claim: The universe is a computational system at its core.

- Why it aligns: You're essentially proposing that LLMs are "toy universes" where the underlying computation is explicit, not hidden. This aligns beautifully with digital physics or strong simulation hypotheses.

🧱 Theories That Contradict or Clash With It

1. Classical Mechanics (Newtonian Paradigm)

- Claim: The universe is deterministic, physical, and continuous — not discrete or informational.

- Why it clashes: Classical mechanics views mass, force, and motion as primary, not information. It doesn't provide a framework where information gives rise to matter. Your analogy bypasses or disregards classical continuity.

2. Materialism (in Philosophy of Mind)

- Claim (in its strict biological form): Consciousness and cognition arise only from biological matter (neurons, etc.).

- Why it clashes: Your analogy implies that meaning or "presence" can emerge from digital information, which strict biological materialists would reject as incoherent or anthropomorphic.

3. Thermodynamic View of Entropy (as Disorder Only)

- Claim: Entropy is disorder, and its growth means a steady loss of order and structure.

- Why it clashes: You're proposing information as the generator of order, which fits better with Shannon entropy or negentropy than traditional thermodynamic views. It's a shift from energy-centric to information-centric understanding.
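For reference, Shannon entropy is H(p) = -Σ p·log₂ p, measured in bits; the Gibbs entropy of statistical mechanics has the same form up to the constant k_B and the choice of logarithm base. A minimal sketch, assuming the probabilities describe something like an LLM's next-token distribution (the example numbers are made up for illustration): a flat distribution carries maximal uncertainty, while a sharply peaked one, where context has "structured" the outcome, has low entropy.

```python
import math


def shannon_entropy(probs: list[float]) -> float:
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


uniform = [0.25, 0.25, 0.25, 0.25]  # no preference among 4 tokens
peaked = [0.97, 0.01, 0.01, 0.01]   # context strongly favors one token
certain = [1.0]                     # fully determined outcome

print(shannon_entropy(uniform))  # 2.0
print(shannon_entropy(peaked))   # about 0.24
print(shannon_entropy(certain))  # 0.0
```

In this reading, a prompt that sharpens the next-token distribution lowers its entropy, which is closer to the negentropy framing above than to entropy as pure thermodynamic disorder.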

🧩 In Summary